You can just pull GGUFs from ollama

So if there’s a new LLM available that’s not yet been published to the main ollama hub (https://ollama.com/library) but does have a GGUF available on huggingface it’s pretty easy to get it running in ollama without having to use llama.cpp or anything like that.

For example when Deepseek R1 came out recently, it was available in Unsloth’s Deepseek collection on Huggingface well before it was available on the Ollama hub. And you can just use those versions in Huggingface directly (credit to this HN post for how to do it).

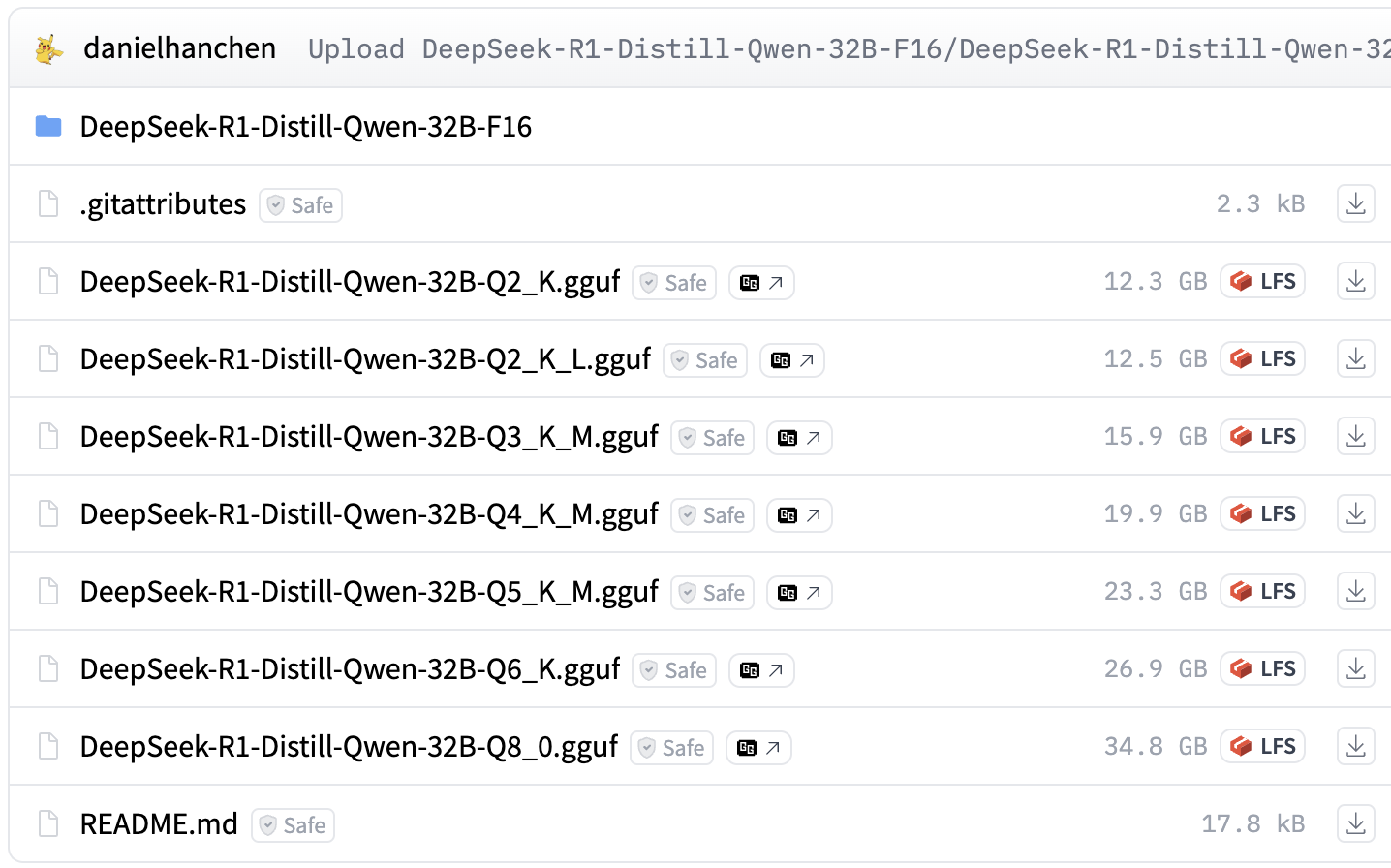

The trick is to use the direct huggingface url for the model (you can use the hf.co/ variant to keep it shorter). For example if you check out the files for this GGUF it might look like this:

If you wanted to grab the Q8 version, the full URL would be: https://huggingface.co/unsloth/DeepSeek-R1-Distill-Qwen-32B-GGUF/blob/main/DeepSeek-R1-Distill-Qwen-32B-Q8_0.gguf

So you can shorten this down to the following ollama incantation:

ollama run hf.co/unsloth/DeepSeek-R1-Distill-Qwen-32B-GGUF:32B-Q8_0

The only tricky bit is that the last bit (which quantized variant you want) gets turned into a : and you don’t include the .gguf extension.

Ollama has some machinery under the hood which realized that it needs to download the file from huggingface, and once the download is complete it’s available in your ollama models list just like any standard ollama model.